Adding alerts across your monitoring tools is a proactive approach to reliability. But too many alerts become counterproductive, because team members start ignoring them or remove the alerting altogether. That is why you need a systematic approach to adding alerts and dealing with them.

How many alerts is too many?

To find out whether your team is getting too many alerts, begin by deciding the maximum number of alerts that a team member can handle in a given shift. Base this number on the team members' workload and the average time needed to deal with an alert. For example, Google targets a maximum of 2 alerts in a 12-hour shift.
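As a rough illustration of how such a maximum could be derived from workload and handling time, here is a minimal Python sketch. The function name `max_alerts_per_shift` and the example numbers (half of a 12-hour shift available for alerts, 3 hours per alert) are assumptions chosen for illustration, not figures from any particular team.

```python
import math


def max_alerts_per_shift(shift_hours: float,
                         alert_time_share: float,
                         avg_handling_hours: float) -> int:
    """Estimate how many alerts one person can handle in a shift.

    shift_hours:        length of the shift in hours
    alert_time_share:   fraction of the shift that can go to alerts (0..1),
                        the rest being reserved for other work
    avg_handling_hours: average time needed to deal with one alert
    """
    if avg_handling_hours <= 0:
        raise ValueError("avg_handling_hours must be positive")
    if not 0 <= alert_time_share <= 1:
        raise ValueError("alert_time_share must be between 0 and 1")
    # Round down: a partially handled alert does not fit in the shift.
    return math.floor(shift_hours * alert_time_share / avg_handling_hours)


# Illustrative numbers only: 12-hour shift, half of it for alerts,
# 3 hours per alert on average.
budget = max_alerts_per_shift(12, 0.5, 3)
```

Adjust the inputs to your own team's workload; the point is only that the target should follow from measured handling time rather than from a guess.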

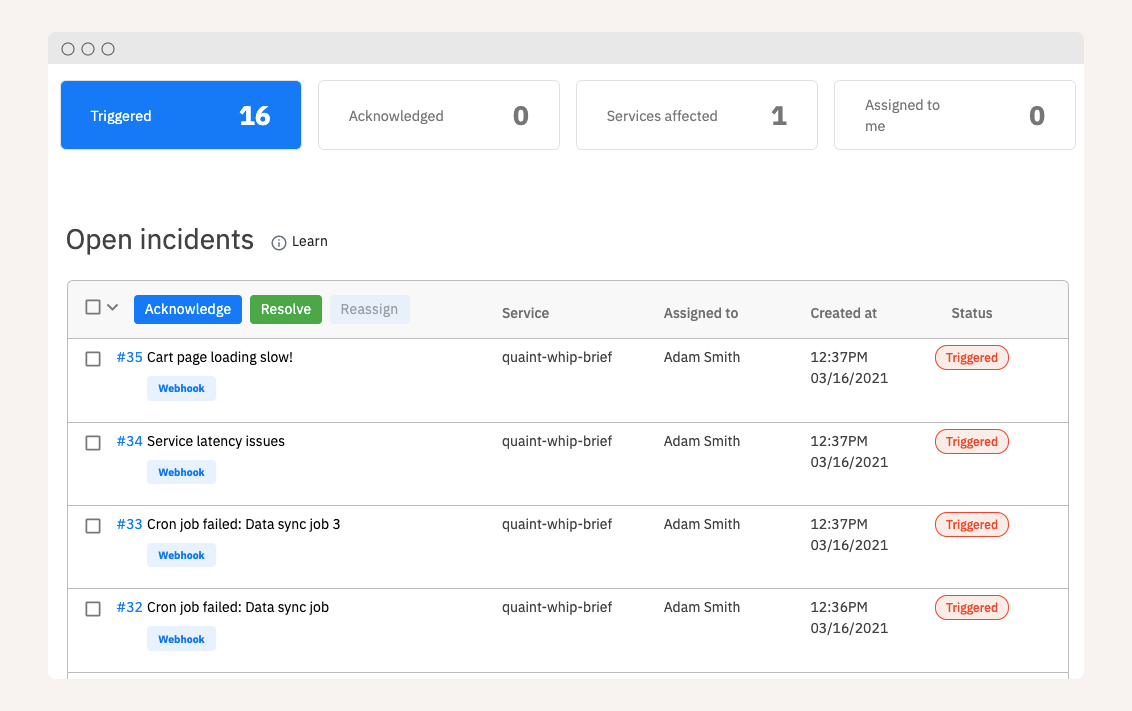

Once you have set a target, start tracking the number of alerts and review it during your regular team meetings. If the number of alerts regularly exceeds the maximum, discuss spending more time on fixing the causes of the alerts.
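The tracking itself can be very simple. The sketch below assumes you can export alert timestamps from your alerting tool, that shifts run 00:00 to 12:00 and 12:00 to 24:00, and that the target is 2 alerts per shift. The names `shift_start` and `shifts_over_budget` are hypothetical helpers, not part of any tool.

```python
from collections import Counter
from datetime import datetime

SHIFT_HOURS = 12          # assumed shift length
MAX_ALERTS_PER_SHIFT = 2  # assumed target


def shift_start(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its shift (00:00 or 12:00)."""
    hour = (ts.hour // SHIFT_HOURS) * SHIFT_HOURS
    return ts.replace(hour=hour, minute=0, second=0, microsecond=0)


def shifts_over_budget(alert_times, budget=MAX_ALERTS_PER_SHIFT):
    """Return {shift start: alert count} for every shift above the budget."""
    counts = Counter(shift_start(ts) for ts in alert_times)
    return {start: n for start, n in sorted(counts.items()) if n > budget}


# Made-up sample data: three alerts in the morning shift, one in the evening.
alerts = [
    datetime(2024, 1, 1, 1, 0),
    datetime(2024, 1, 1, 3, 30),
    datetime(2024, 1, 1, 9, 15),
    datetime(2024, 1, 1, 14, 0),
]
over = shifts_over_budget(alerts)
# Only the shift starting 2024-01-01 00:00 is over budget, with 3 alerts.
```

A list like `over` is a convenient thing to bring to the team meeting: it shows not just that the target was missed, but in which shifts.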

Dealing with alert noise

Here are some ways of reducing alert noise.

- To reduce alerts caused by bugs, aim to remove as many bugs as possible before the software reaches production. Perform both manual and automated testing before pushing new changes to production. Some bugs only show up when the system is under high load, so you should also perform load testing to surface such bugs.

- To make your system more resilient, you can also start doing chaos testing or holding game days.

- One way to limit the impact of bugs is a canary release, where a new feature or code change is released to a small set of users and observed for bugs before it is released to everyone.

- Before adding a monitoring alert, make sure that the alert is actionable and has clear steps for dealing with it. Ideally, you should document these steps in docs or playbooks for every new alert so that they are available to the team members dealing with the alert.

- Trigger phone calls only for urgent alerts. Otherwise, the alert should just create a ticket that the team can handle later.

- Where possible, alerts should be auto-resolved, either by handling the resolution events sent by the alerting source or by adding automated actions that resolve the alert.

- Each new alert should be reviewed to understand if it will cause noise in the future.

- Each alert should be investigated to find its root cause, and you should try to fix that cause so that the alert does not happen again.

- Have a process for finding the root cause of alerts and fixing it before moving on.
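Two of the points above, requiring every alert to be actionable and calling people only for urgent alerts, can be enforced in code. The following is a minimal sketch under stated assumptions: the `Alert` class, the `route` function, the `"page"` and `"ticket"` channel names, and the `example.com` runbook URL are all hypothetical and stand in for whatever your alerting tool provides.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alert:
    name: str
    urgent: bool
    runbook_url: Optional[str] = None  # link to the steps for handling the alert


def route(alert: Alert) -> str:
    """Decide how an alert reaches the team.

    Rejects alerts without a runbook, since an alert with no documented
    steps is not actionable. Urgent alerts page someone by phone;
    everything else becomes a ticket to handle later.
    """
    if not alert.runbook_url:
        raise ValueError(f"alert {alert.name!r} has no runbook")
    return "page" if alert.urgent else "ticket"


# Placeholder alerts for illustration.
db_down = Alert("db-down", urgent=True,
                runbook_url="https://example.com/runbooks/db-down")
disk_70 = Alert("disk-70-percent", urgent=False,
                runbook_url="https://example.com/runbooks/disk")

channels = [route(db_down), route(disk_70)]
```

Running a check like this when an alert is defined, rather than when it fires, keeps non-actionable alerts from reaching production in the first place.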

If you would like to know how to set up the right alerts, drop a line to us at [email protected].